How to Connect Chatbox to a Remote Ollama Service: A Step-by-Step Guide

You can now run many open-source AI models right on your computer or server. Running models locally has several benefits:

- Complete privacy and security with offline operation

- No API costs or fees - completely free to use

- No internet lag or connection issues

- Full control over model parameters

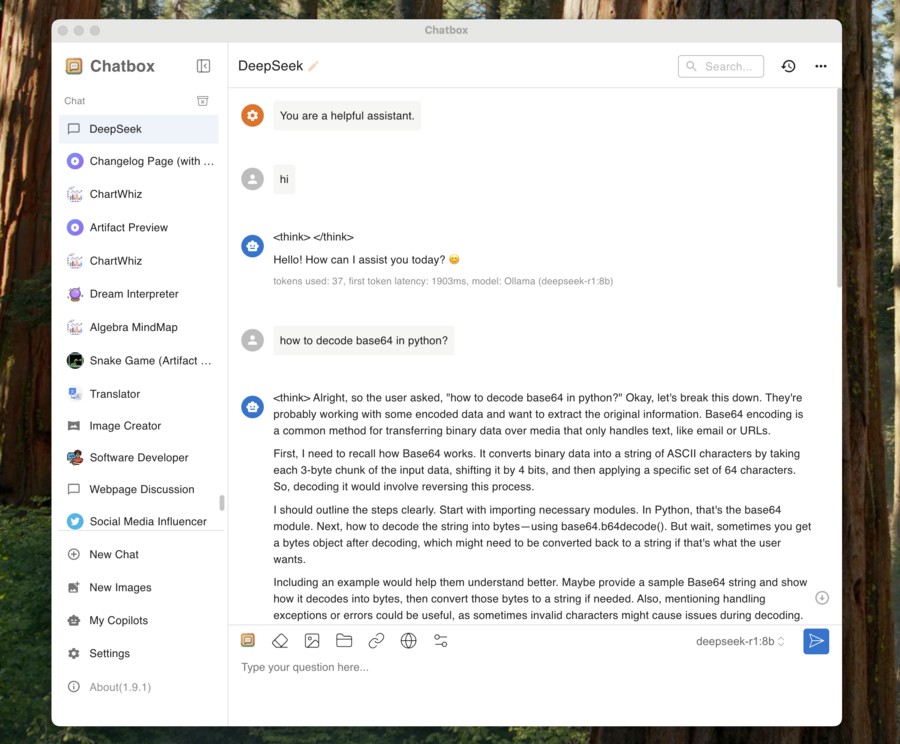

Chatbox works great with Ollama, giving you access to powerful features like Artifact Preview, file analysis, chat management, and custom prompts - all with your local models.

Note: Local models require adequate hardware resources (RAM and GPU). If you experience performance issues, try adjusting the model parameters to reduce resource usage.

Install Ollama

Ollama is a simple tool that lets you download and run open-source AI models like Llama, Qwen, and DeepSeek on Windows, Mac, or Linux.

Get Started with Local Models

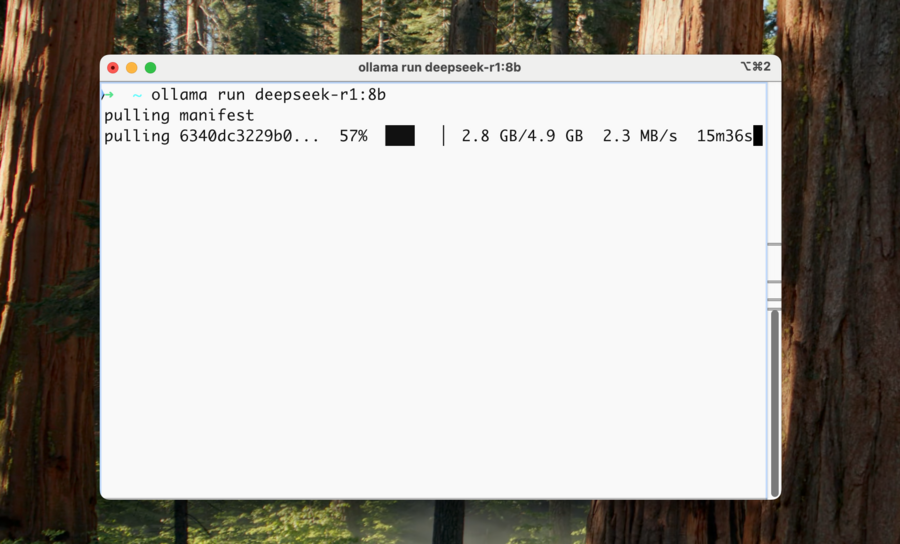

Once you've installed Ollama, open your terminal and run a command to download and start a model. Check out all available models at Ollama's model hub.

Example 1: Run llama3.2

ollama run llama3.2

Example 2: Run deepseek-r1:8b (Note: This is a distilled version of DeepSeek R1)

ollama run deepseek-r1:8b

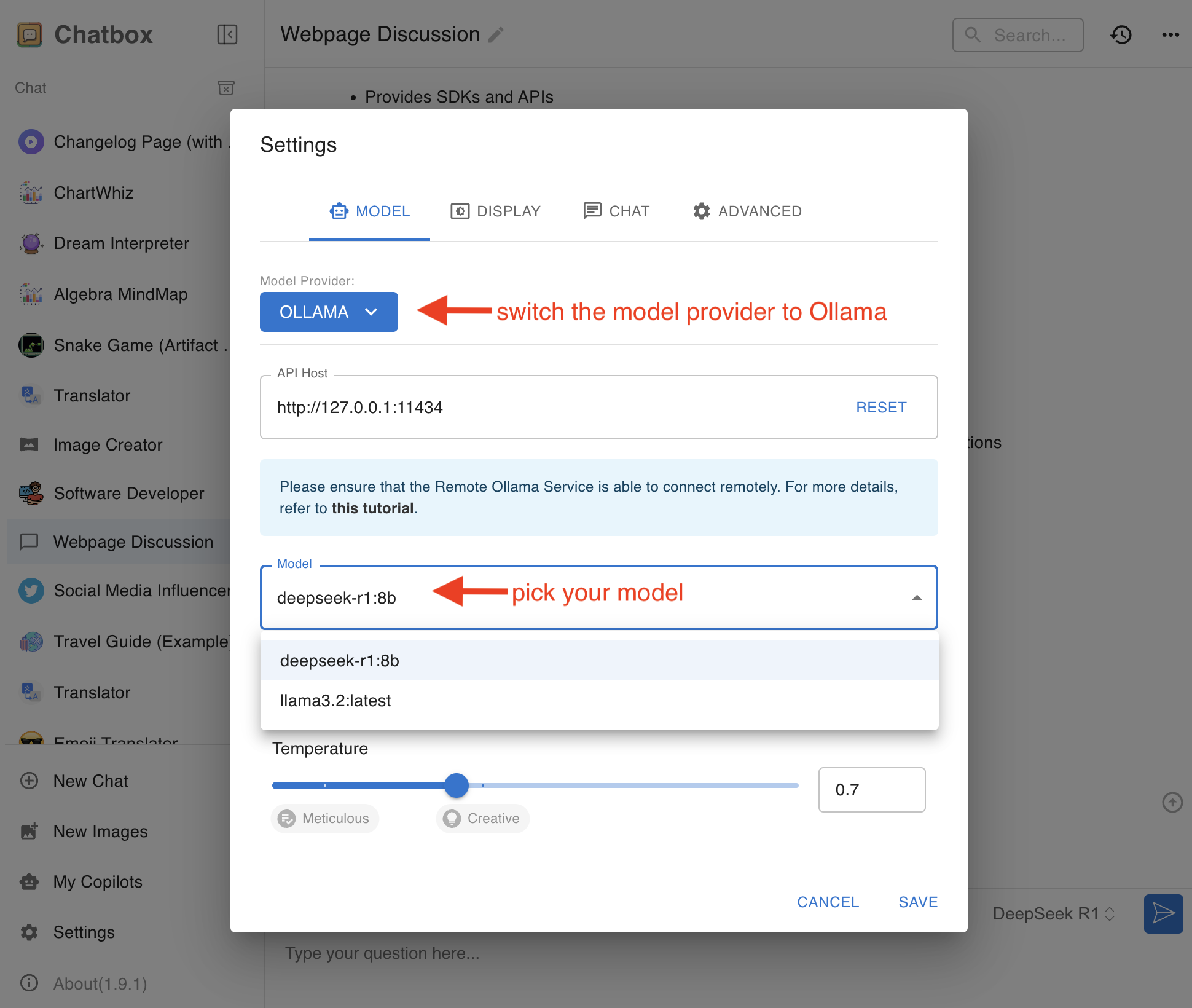

Using Ollama with Chatbox

Just open Chatbox settings, pick Ollama as your model provider, and select your running model from the dropdown.

Hit save and you're ready to chat!
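If the dropdown is empty, it can help to confirm that the local Ollama service is responding and which models it has downloaded. The sketch below is one way to check this from Python. It assumes Ollama is listening on its default local address, `http://localhost:11434`, and uses the `/api/tags` endpoint, which lists locally available models. The function names are illustrative and not part of Chatbox or Ollama.

```python
import json
import urllib.request

# Default address of a local Ollama service (assumption: default port unchanged).
DEFAULT_HOST = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_models(host: str = DEFAULT_HOST, timeout: float = 5.0) -> list[str]:
    """Ask the Ollama service at `host` which models it has available."""
    with urllib.request.urlopen(f"{host}/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

Calling `list_models()` while Ollama is running should return the models you have pulled. A connection error usually means the service is not running or is listening on a different address.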

Connect to Remote Ollama

Chatbox also supports connecting to Ollama services on remote machines, in addition to local connections.

For instance, you can run the Ollama service on your home computer and access it using the Chatbox application on your phone or another computer.

For this to work, the remote Ollama service must be configured to accept connections from other devices on the current network so that Chatbox can reach it. Only a small amount of configuration is needed.

How to Configure the Remote Ollama Service?

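By default, the Ollama service listens only on the local machine and does not accept connections from other devices. To make it available externally, set the following two environment variables. The first makes Ollama listen on all network interfaces, and the second allows requests from any origin: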

OLLAMA_HOST=0.0.0.0

OLLAMA_ORIGINS=*
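To try these settings temporarily before making them permanent, you could start the server by hand with both variables set. This is a minimal sketch, assuming the `ollama` binary is on your PATH and no other Ollama instance is already using the port. `remote_env` and `serve_remote` are illustrative helper names, not part of Ollama.

```python
import os
import subprocess


def remote_env(base=None):
    """Return a copy of the environment with the two remote-access variables set."""
    env = dict(os.environ if base is None else base)
    env["OLLAMA_HOST"] = "0.0.0.0"  # listen on all network interfaces
    env["OLLAMA_ORIGINS"] = "*"     # accept requests from any origin
    return env


def serve_remote():
    """Start `ollama serve` as a child process with the remote-access environment."""
    return subprocess.Popen(["ollama", "serve"], env=remote_env())
```

The variables set this way apply only to that child process, so the permanent per-platform configuration is still needed for the regular Ollama application or service.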

Configuring on macOS

- Open the command line terminal and enter the following commands:

  launchctl setenv OLLAMA_HOST "0.0.0.0"

  launchctl setenv OLLAMA_ORIGINS "*"

- Restart the Ollama application to apply the settings.

Configuring on Windows

On Windows, Ollama inherits your user and system environment variables.

- Exit Ollama via the taskbar.

- Open Settings (Windows 11) or Control Panel (Windows 10), and search for "Environment Variables".

- Click to edit your account's environment variables.

- Edit or create a variable named OLLAMA_HOST for your user account, with the value 0.0.0.0. Then edit or create a variable named OLLAMA_ORIGINS for your user account, with the value *.

- Click OK/Apply to save the settings.

- Launch the Ollama application from the Windows Start menu.

Configuring on Linux

If Ollama is running as a systemd service, use systemctl to set the environment variables:

- Run systemctl edit ollama.service to edit the systemd service configuration. This will open an editor.

- Under the [Service] section, add a line for each environment variable:

  [Service]

  Environment="OLLAMA_HOST=0.0.0.0"

  Environment="OLLAMA_ORIGINS=*"

- Save and exit.

- Reload systemd and restart Ollama:

  systemctl daemon-reload

  systemctl restart ollama

Service IP Address

After configuration, the Ollama service will be available on your current network (such as home WiFi). You can use the Chatbox client on other devices to connect to this service.

The IP address of the Ollama service is your computer's address on the current network, typically in the form:

192.168.XX.XX

In Chatbox, set the API Host to:

http://192.168.XX.XX:11434
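The API Host value is simply the machine's address on the local network plus the Ollama port. The sketch below builds that URL and rejects addresses outside the private ranges, in keeping with the advice not to expose the service on public networks. The address `192.168.1.20` is a placeholder, and `api_host` is a hypothetical helper, not a Chatbox or Ollama function.

```python
import ipaddress

OLLAMA_PORT = 11434  # Ollama's default port


def api_host(ip: str, port: int = OLLAMA_PORT) -> str:
    """Build the Chatbox API Host URL for an Ollama service on a private network."""
    addr = ipaddress.IPv4Address(ip)  # raises ValueError for malformed input
    if not addr.is_private:
        raise ValueError(f"{ip} is not a private network address")
    return f"http://{addr}:{port}"


print(api_host("192.168.1.20"))  # prints http://192.168.1.20:11434
```

Replace the placeholder with the address your operating system reports for the machine running Ollama.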

Considerations

- You may need to allow the Ollama service port (default 11434) through your firewall, depending on your operating system and network environment.

- To avoid security risks, do not expose the Ollama service on public networks. A home WiFi network is a relatively safe environment.
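If Chatbox cannot connect, one way to tell a firewall problem from a configuration problem is to test whether the port is reachable from the other device. This is a minimal sketch using a plain TCP connection attempt. `port_is_open` is an illustrative name, and the check only shows that something accepts connections on the port, not that it is Ollama.

```python
import socket


def port_is_open(host: str, port: int = 11434, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `port_is_open("192.168.1.20")` (a placeholder address) returning False from a second device, while the same call succeeds on the Ollama machine itself, would suggest that a firewall or the OLLAMA_HOST setting is blocking remote access.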